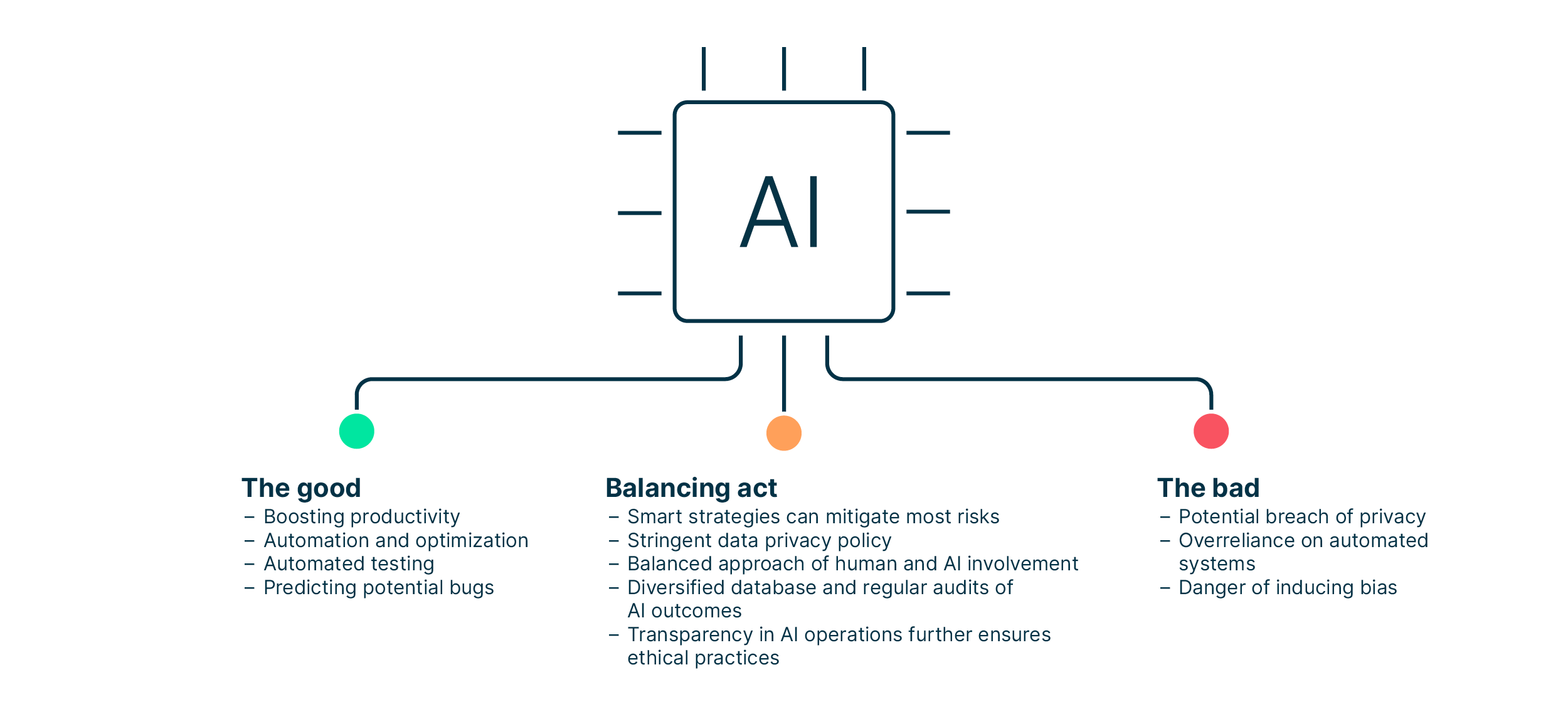

In the age of artificial intelligence (AI), prioritizing privacy has become more crucial than ever. According to statistics, one in three people are concerned about their data when navigating the digital world. Data protection is therefore non-negotiable, but AI can no longer be ignored. How can this apparent contradiction be resolved?

We show how AI governance contributes to the protection of privacy, how it can be integrated into software development and how programmers who use AI can ensure «privacy by design and default» in an application. Plus, we list the most common security threats to AI-augmented apps and provide countermeasures. Of course, there is also a comparison of the advantages and disadvantages of AI-supported software development, and more.

Understanding AI governance: the key to data privacy

Learn what AI governance is about, the role it plays in ensuring data privacy, and what best practices to follow when implementing it in your software development process.

What is AI governance?

Think of AI governance as the system of policies and procedures that determine how AI features are employed and monitored. It provides a legal framework that guides the utilization of AI technologies, ensuring ethical, lawful, and efficient outcomes. The aim is to close the gap that exists between accountability and ethics in technological advancement.

Among other things, AI governance addresses AI algorithms, security, and data privacy.

The role of AI governance in ensuring data privacy

One in three individuals are concerned about their online privacy, so data privacy is non-negotiable. It becomes even more of a challenge when AI comes into play.

AI governance thus plays a key role. It balances the creativity of AI in software development with data privacy, ensuring that software engineers adhere to the rules when they handle personal data in the development process. What's more, a robust AI governance framework enhances trust among users and customers.

Best practices for implementing AI governance in software development

When incorporating AI governance into your software development cycle, make sure to follow these best practices:

| Best practices for implementing AI governance |
| --- |
| 1. Data privacy |
| 2. Bias identification |
| 3. Security |
| 4. Accountability |
| 5. Transparency |
| 6. Training |
| 7. Continuous improvement |

- Data privacy

  Adhere to data protection regulations such as GDPR and FADP and ensure consent, anonymization, and secure handling of sensitive data.

- Bias identification

  Ensure high-quality and diverse datasets to avoid biases in AI models. Continuously assess models for biases, particularly concerning gender, race, or other sensitive attributes.

- Security

  Implement robust security measures to protect AI models and the data they process from cyber threats.

- Accountability

  Establish mechanisms to hold individuals and teams accountable for the ethical use of AI systems.

- Transparency

  Communicate transparently about the use of AI in software development to clients and partners, addressing concerns about data usage, decision-making, and privacy.

- Training

  Provide regular training on AI ethics, governance, and best practices for employees involved in AI-assisted development.

- Continuous improvement

  Establish feedback mechanisms to gather insights from clients and partners, allowing for improvements in AI models and governance practices.
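To make the bias assessment practice more concrete, here is a minimal sketch of one possible check: comparing how often a model returns a positive outcome for each group. The metric (often called the demographic parity gap), the function names, and the sample data are illustrative assumptions, not a prescribed method; a real assessment would typically combine several fairness metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and a sensitive attribute per record.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is a signal that the model and its training data deserve a closer look.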

Implementing AI governance in software development is like building a safety net. It’s there to catch any issues that might violate data privacy or integrity.

Securing AI-driven apps: a must for data privacy

As AI-driven applications rely on models that contain valuable and often sensitive information, it comes as no surprise that they are facing multiple security threats.

The importance of security in AI-driven apps

Securing data in AI-driven applications is paramount, as data breaches across sectors show. The unique attributes of these applications, such as in-depth user interactions, sensitive information, and powerful predictive capabilities, make them enticing targets for attackers.

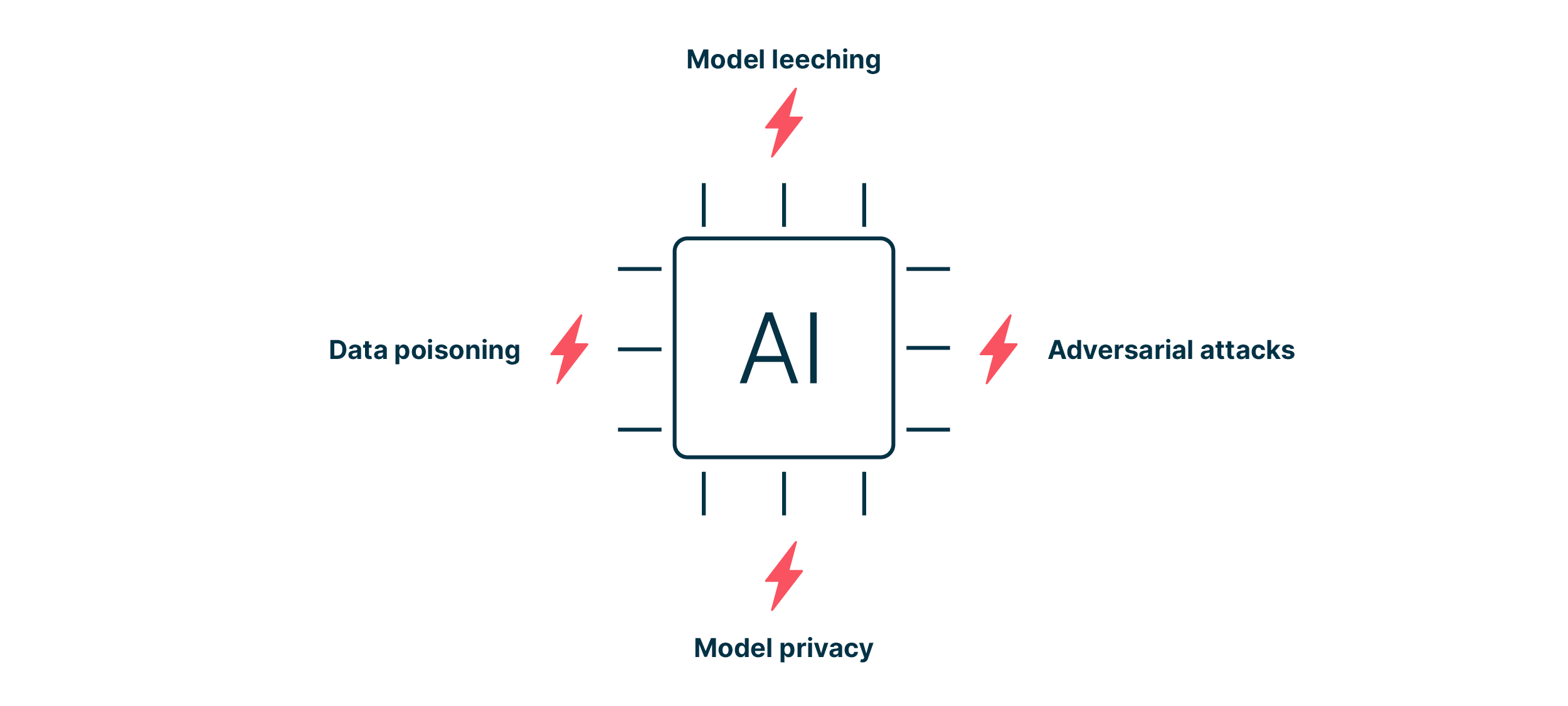

Common security threats in AI-driven apps

Like any software system, AI-driven applications face security challenges, some of which are unique to AI. Here are four worth noting:

Data poisoning

With data poisoning, an attacker manipulates training data to misguide AI algorithms. As a result, AI-driven apps may make wrong predictions or conclusions, negatively impacting decision-making processes and potentially leading to serious consequences.

Example: In 2016, Microsoft's Tay bot on Twitter quickly started to interact in a socially unacceptable fashion after learning from conversations with other users.

Adversarial attacks

Adversarial attacks are designed to deceive AI algorithms through subtly modified input data. Even slight modifications, invisible to the human eye, can trick AI systems into misclassifying information.

Example: A small modification, such as a sticky note on a stop sign, can cause a car's autopilot to misinterpret the road sign, with potentially disastrous consequences.
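The mechanism can be illustrated with a deliberately tiny model. The sketch below applies the idea behind the fast gradient sign method to a hand-written linear classifier: each input value is nudged by at most `epsilon` in the direction that pushes the score across the decision boundary. The weights, input, and `epsilon` are made-up values for illustration; real attacks target far larger models.

```python
def predict(weights, bias, x):
    """Linear classifier: returns (label, score), label is 1 if score > 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return (1 if score > 0 else 0), score


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_example(weights, x, current_label, epsilon):
    """Shift every feature by at most epsilon against the current label.

    For a linear model the gradient of the score with respect to the input
    is simply the weight vector, so we step along sign(weights).
    """
    direction = -1 if current_label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model parameters and input.
weights, bias = [1.0, -2.0, 0.5], 0.1
x = [0.5, 0.2, 0.3]

label, score = predict(weights, bias, x)
x_adv = adversarial_example(weights, x, label, epsilon=0.15)
adv_label, adv_score = predict(weights, bias, x_adv)
print(label, adv_label)
```

No feature changes by more than 0.15, yet the predicted class flips. This is why input validation and adversarial testing belong in the security process for AI-driven apps.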

Model leeching

Model leeching enables an attacker to extract parts of what a large language model (LLM) has learned and to use the information gained to launch targeted attacks. It works by sending the LLM a set of targeted prompts so that its answers reveal how the model works.

Model privacy

With a model privacy attack, a hacker tries to obtain information about the training data of the model itself. Data sets can be an important intellectual property asset and may also be sensitive (such as personal healthcare data). In addition, attackers might steal the model itself. By probing a model that took years to develop with enough queries and examples, they may be able to rebuild it in a fraction of the time.

Example: An attacker can guess whether certain data was used for training and even roughly reconstruct complex training data such as faces.

Does this make AI too risky to use?

No. Understanding the threats is a major step towards fortifying your AI software against potential security breaches. And there are ways to enhance security.

Strategies for enhancing security in AI-driven apps

To mitigate the risks and possible fallout from the explained threats, the following security strategies may prove beneficial:

| Strategies for enhancing AI-app security |
| --- |
| 1. Data encryption |
| 2. Data anonymization and minimization |
| 3. Model security |
| 4. Access control |
| 5. Robust authentication |
| 6. Monitoring and logging |
| 7. Regulatory compliance |
| 8. Regular security audits and assessments |
| 9. Regular updates |

- Data encryption

  Employ strong encryption methods to protect data at rest and in transit. This ensures that sensitive information used in AI models remains secure.

- Data anonymization and minimization

  Anonymize sensitive data used in AI models whenever possible to reduce the risk of exposing personally identifiable information (PII).

- Model security

  Protect AI models by applying techniques like model watermarking, differential privacy, and federated learning to prevent model theft, tampering, or bias exploitation.

- Access control

  Implement strict access controls to limit who can interact with the AI system and the data it handles. Role-based access control (RBAC) can help in managing permissions effectively.

- Robust authentication

  Enforce strong authentication mechanisms like multi-factor authentication (MFA) to prevent unauthorized access to the AI app or its data.

- Monitoring and logging

  Implement comprehensive logging mechanisms to track system behavior and user activities. Real-time monitoring helps in detecting anomalies and potential security breaches.

- Regulatory compliance

  Ensure compliance with relevant data protection regulations (e.g., GDPR, Swiss FADP) and industry standards to maintain legal and ethical obligations regarding user data.

- Regular security audits and assessments

  Conduct routine security audits and assessments to identify weaknesses and gaps in the system. Penetration testing can help simulate real-world attacks to evaluate defenses.

- Regular updates

  Keep AI frameworks, libraries, and underlying software updated with the latest security patches to mitigate known vulnerabilities.
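As an illustration of the anonymization and minimization strategy, the following sketch drops every field a model does not need and replaces the user identifier with a keyed hash before the record enters a training pipeline. The field names, the allow-list, and the key handling are assumptions made for this example. Note that a keyed hash is pseudonymization rather than full anonymization: whoever holds the key can still link records, so the result generally remains personal data under GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the model is assumed to need.
ALLOWED_FIELDS = {"user_id", "age_band", "country"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes) -> dict:
    """Minimize a record to the allow-list, then pseudonymize its user_id."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["user_id"] = pseudonymize(str(minimized["user_id"]), secret_key)
    return minimized


# In practice the key would come from a secrets manager, never from source code.
demo_key = b"replace-with-a-secret-from-your-vault"
raw = {
    "user_id": "u-1001",
    "email": "jane@example.com",
    "age_band": "30-39",
    "country": "CH",
}
safe = prepare_record(raw, demo_key)
print(sorted(safe))
```

The same input and key always produce the same pseudonym, so records can still be joined for training without exposing the original identifier.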

By integrating these strategies into the development and maintenance of AI-driven apps, it's possible to strengthen their security posture and better protect sensitive data and functionalities.

Always remember: Maintaining AI software and ensuring data privacy is a continuous, evolving process.

Data protection in AI: a comprehensive approach

AI’s ability to process high volumes of – often sensitive – data within seconds makes it a preferred target for hackers. Therefore, an in-depth understanding of data protection in this area is key and requires a comprehensive approach.

Understanding the concept of data protection in AI

Data protection in AI goes beyond general data privacy. Primarily, data protection measures in AI aim to safeguard sensitive data from unauthorized access and breaches. They go a step further by ensuring that sensitive data is not manipulated while it is processed within AI models. The growth of AI technology has boosted the demand for stringent data protection measures.

These measures focus on:

- data anonymization techniques, which play an instrumental role in preserving data privacy: they disguise sensitive data so that it can be used in various AI models while mitigating the risk of a data breach

- high-security encryption methods and sophisticated access control systems

- risk analysis and regular audits as complementary practices that add an extra layer of security
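An access control system of the kind mentioned above can be sketched in a few lines. The example below is a minimal role-based access control (RBAC) check; the role names and permissions are hypothetical, and a production system would load them from a policy store and tie them to authenticated identities.

```python
# Hypothetical mapping of roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_features", "train_model"},
    "auditor": {"read_audit_log"},
    "admin": {"read_features", "train_model", "read_audit_log", "export_raw_data"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Return True only if the role explicitly grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def require(role: str, permission: str) -> None:
    """Raise PermissionError when the role lacks the permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"role {role!r} may not perform {permission!r}")


require("data_scientist", "train_model")  # passes silently
print(is_allowed("data_scientist", "export_raw_data"))
```

Unknown roles receive an empty permission set, so access is denied by default rather than granted by accident.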

Intertwining data protection measures with AI doesn't mean stifling the technology. Rather, it's a proactive approach that empowers companies to unlock AI's full potential with minimum risks.

The role of data protection in AI software development

AI-augmented software development and data protection go hand in hand. A well-rounded AI model is one that puts data privacy at its core. As a major strength of AI lies in its ability to process and learn from large data sets, focusing on how this data is protected is vital.

In a nutshell, data protection in AI-powered software development means using AI models that are «secure by design». This not only avoids patching vulnerabilities that appear later, but also reduces the likelihood of neglecting any fundamental security measures. In addition, it helps in early detection of any potential security threats.

Important: The role of data protection in AI-augmented software development goes beyond technical aspects. It shapes a company's reputation and trustworthiness, as data privacy is a driving force for potential partnerships and client acquisition.

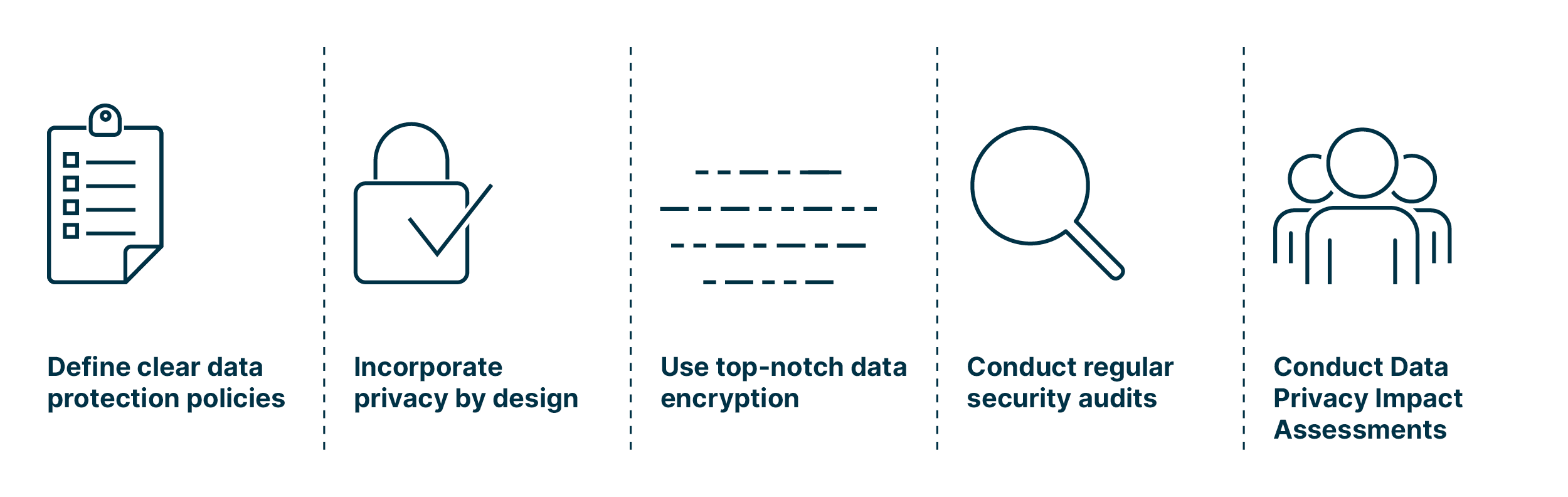

Best practices for ensuring data protection in AI

As AI continues to dominate the tech world, companies need to shape their data privacy measures accordingly. Here are five best practices to ace data protection in AI:

- Define clear data protection policies from the outset

  Establishing crystal-clear data protection guidelines helps everyone comprehend the seriousness of data privacy. Make sure to communicate these policies across all departments.

- Incorporate privacy by design and default in AI development

  Privacy should not be an afterthought in the AI-augmented development process. Instead, programmers need to prioritize it right from the design phase.

- Use top-notch data encryption techniques

  Utilizing state-of-the-art encryption methods significantly reduces the risk of data breaches.

- Conduct regular security audits

  Periodic security audits help detect potential vulnerabilities in the system and offer insights into improving overall security.

- Conduct Data Protection Impact Assessments (DPIA)

  A DPIA helps organizations identify, assess, and mitigate risks in processing personal data, especially for projects involving new technologies or sensitive data with high risks for individuals' privacy. DPIAs are often mandated by data protection regulations like GDPR.
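What "privacy by default" can look like in code is sketched below: every optional use of personal data starts switched off, and only an explicit opt-in changes that. The setting names and the 30-day retention value are assumptions chosen for illustration, not requirements taken from any regulation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PrivacySettings:
    """User privacy settings whose defaults are the most protective option."""
    share_usage_analytics: bool = False
    use_data_for_model_training: bool = False
    personalized_recommendations: bool = False
    retention_days: int = 30  # hypothetical default retention period


def may_use_for_training(settings: PrivacySettings) -> bool:
    """Data may feed model training only after an explicit opt-in."""
    return settings.use_data_for_model_training


new_user = PrivacySettings()  # nothing is shared unless the user chooses so
opted_in = replace(new_user, use_data_for_model_training=True)

print(may_use_for_training(new_user), may_use_for_training(opted_in))
```

Making the settings object immutable means a change of consent always produces a new, explicit value that can be logged and audited.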

Keep in mind that adopting best practices is not a one-time measure. Constant updates and improvements are necessary to keep pace with evolving AI technology and security threats.

AI adoption security: a new frontier in data privacy

AI adoption security is a vital area of concern that directly impacts data privacy. This chapter sheds some light on the challenges organizations are facing when they strive to safeguard data during AI adoption. Plus, it lists a number of strategies to leverage in order to enhance security throughout AI adoption phases.

The link between AI adoption and data privacy

AI adoption is transforming industries across the globe. However, as AI usage increases, so does the volume of data it processes, creating a critical link between AI adoption and data privacy.

In an era when data breaches make the headlines, rock solid data privacy measures are key. Organizations that fail to implement such measures may face regulatory fines, customer churn, or even lasting brand damage in case of a data incident.

True: AI adoption is a balancing act. It’s about ensuring data privacy while harnessing AI’s power.

Challenges in ensuring security during AI adoption

The crux of ensuring data privacy during AI adoption is aligning two factors: the increasingly fast pace of AI development and ever-evolving data privacy regulations. This leads to some unique challenges:

- AI systems are «data hungry», needing extensive data to become accurate and effective. Balancing this demand with individual privacy rights is a key task.

- There's the issue of AI explainability – or lack thereof. Understanding how AI systems make decisions is not always clear, especially with advanced ML models. When client data becomes a part of this «AI black box», questions about data usage, transparency, and consent arise.

- There's a technical challenge. AI systems often involve complex infrastructure, incorporating cloud repositories, IoT devices, and other network endpoints. Protecting all components of this wide-reaching surface from cyber threats calls for advanced security strategies.

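| AI adoption security: challenges | Strategies |
| --- | --- |
| «Data hungry» AI systems vs. individual privacy rights | Privacy by design and default |
| Limited explainability («AI black box») | AI interpretability tools |
| Complex infrastructure with a wide attack surface | Robust cybersecurity measures, such as AI-powered threat detection |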

Strategies for enhancing security during AI adoption

Enhancing security and thus data privacy in AI adoption requires a blend of advanced tools, revamped processes, and a shift in mindset:

- Organizations should consider embracing privacy by design and default principles. Ensuring privacy from minute one, rather than patching and debugging later, significantly bolsters data security.

- It is recommended to invest in AI interpretability tools that can help unpack the «AI black box», promoting transparency and trust.

- Consider employing robust cybersecurity measures, such as AI-powered threat detection and prevention systems, to protect the technical infrastructure from potential breaches.
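To give an idea of what an interpretability tool does, the sketch below measures permutation importance: it reorders the values of one input feature and checks how much the model's accuracy drops. A large drop means the model relies heavily on that feature. The `black_box` model and the data are toy assumptions, and a fixed cyclic shift stands in for the usual random shuffle so that the result is reproducible.

```python
def accuracy(model, rows, labels):
    """Share of rows for which the model predicts the expected label."""
    correct = sum(model(row) == label for row, label in zip(rows, labels))
    return correct / len(rows)


def with_shifted_column(rows, column):
    """Copy the rows, rotating one column's values by one position."""
    values = [row[column] for row in rows]
    rotated = values[1:] + values[:1]
    return [row[:column] + [v] + row[column + 1:] for row, v in zip(rows, rotated)]


def permutation_importance(model, rows, labels, column):
    """Accuracy lost when the given column no longer lines up with the labels."""
    baseline = accuracy(model, rows, labels)
    permuted = accuracy(model, with_shifted_column(rows, column), labels)
    return baseline - permuted


def black_box(row):
    """Toy stand-in for an opaque model; it secretly uses only feature 0."""
    return 1 if row[0] > 0.5 else 0


rows = [[0.0, 3.0], [1.0, 1.0], [0.0, 2.0], [1.0, 4.0]]
labels = [0, 1, 0, 1]

scores = [permutation_importance(black_box, rows, labels, c) for c in (0, 1)]
print(scores)
```

Without looking inside the model, the scores reveal that it depends entirely on the first feature and ignores the second. If that first feature were a piece of personal data, this would be a clear prompt for a privacy review.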

AI in software development: the good, the bad, and the balancing act

AI's integration in software development offers productivity boosts and error reduction. Yet, risks like privacy breaches demand strategic mitigation, such as a stringent privacy policy.

The good: the benefits of AI in software development

Despite the challenges of wielding new technology, the incorporation of AI in software development offers several noteworthy benefits, for example:

- AI equips developers with a range of smart tools, boosting productivity and making complicated tasks more manageable.

- Automation and optimization through AI can lead to cost savings by reducing the need for manual and repetitive tasks.

- Automated testing, powered by AI, swiftly highlights problematic areas, thus improving quality.

- AI algorithms can predict potential bugs, which prompts developers to tackle issues ahead of time.

Of course, there are many more examples of how AI can benefit software engineering.

The bad: the risks associated with AI in software development

Alongside its benefits, AI presents several risks and challenges:

- Potential breach of privacy: As AI systems often require extensive data to operate, the risk of mishandling, misusing, or leaking sensitive information is high.

- Overreliance on automated systems: Employing AI may diminish human oversight, so unreliable results might slip through, causing software failures and damage to the organization's reputation.

- Danger of inducing bias: Given that AI systems learn from the data they process, they might perpetuate biased judgments if the input data is biased. This might lead to unethical and even unlawful decisions.

The balancing act: how to mitigate risks of AI-powered software development

Smart strategies can mitigate most risks associated with AI in software development.

It starts with ensuring a stringent data protection policy – limiting data access, enforcing encryption practices, and regularly updating security measures can safeguard against data breaches.

Additionally, a balanced approach of human and AI involvement can minimize overreliance on automated systems. Human oversight in AI operations ensures the validation of AI outputs, mitigating risks of software failure.
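One simple way to keep a human in the loop is to accept AI outputs automatically only when the model is confident and to queue everything else for review. The sketch below shows that routing step; the 0.9 threshold and the field names are illustrative assumptions that would need tuning for a real system.

```python
def route(prediction: str, confidence: float, threshold: float = 0.9) -> dict:
    """Accept confident AI outputs; send uncertain ones to a human reviewer."""
    if confidence >= threshold:
        return {"decision": prediction, "handled_by": "auto"}
    # Below the threshold the AI output is only a suggestion for the reviewer.
    return {"decision": None, "handled_by": "human_review", "suggested": prediction}


confident = route("merge", 0.97)
uncertain = route("merge", 0.62)
print(confident["handled_by"], uncertain["handled_by"])
```

Logging how often reviewers overrule the suggestion gives an ongoing measure of how far the automated output can be trusted.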

To tackle the problem of bias in AI outcomes, a diverse dataset is a starting point. Training algorithms on a wide variety of representative data helps produce fair and ethical AI outputs. Regular audits of AI outcomes can also unearth hidden biases.

Transparency in AI operations further ensures ethical practices. By acknowledging AI involvement to stakeholders, you ensure they are well-informed about the potential risks and rewards associated with it.

What to keep in mind

Achieving a seamless integration of AI in software development hinges on proactive data privacy measures. AI governance acts as the cornerstone, harmonizing innovation with personal data protection through best practices and continuous improvement. Prioritizing data protection in AI, with policies, encryption, and regular audits, becomes a strategic advantage, fostering trust and reputation.

Resilience in this dynamic landscape lies in understanding, adapting, and embracing AI's opportunities while safeguarding user trust and privacy.